Request and Response Structure

This page describes the request and response structure of the Liveness API.

Images

The `images` field is a required JSON array in requests to the image liveness detection API. Each item contains an index, an image type, and base64-encoded image data, so that the service can handle various input types and models.

About image storage: Liveness never stores uploaded image files or image data under any circumstances. Base64-encoded image data is discarded after liveness detection.

Index

The index is a required integer field that gives the position of an image item in the sequence. Because Liveness supports various types of models, the order of multiple input images must be specified. Indexes in a request must always start at 0 and increase by 1. The service ignores any images beyond those the model requires, and they do not cause errors. Avoid sending extra images anyway, as they add unnecessary load on both the client and the service.
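A minimal sketch of assigning indexes, assuming you already hold the base64 strings in the order the model expects. The helper name `build_images` is hypothetical and not part of the Liveness API; the data strings in the usage line are shortened placeholders.

```python
def build_images(encoded_images, image_type="RGB"):
    """Build the `images` array with indexes starting at 0 and increasing by 1.

    `build_images` is a hypothetical helper; `encoded_images` is a list of
    base64 strings already ordered as the model expects.
    """
    return [
        {"index": i, "type": image_type, "data": data}
        for i, data in enumerate(encoded_images)
    ]


# Usage: two images receive indexes 0 and 1 (data values are placeholders).
images = build_images(["/9j/4AAQSkZJRgAB=", "/9j/4AAQSkZJRgAC="])
print([item["index"] for item in images])  # [0, 1]
```

Using `enumerate` ties each index to the list position, so the sequence cannot skip or repeat a number.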

Type

The type is a required string field that specifies the kind of image an item contains. Because Liveness supports various types of models, the type of each input image must be specified. The supported image types are listed below. The list will be extended as new models are introduced.

| Name | Description |
|---|---|
| RGB | A colored image taken by RGB cameras |
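The index, type, and data rules on this page can be checked on the client before sending a request. The sketch below is a hypothetical pre-check, not a Liveness API call; `SUPPORTED_TYPES` mirrors the table above and must be updated by hand as new types are added, and the base64 validity check is an extra assumption beyond what the API documents.

```python
import base64
import binascii

# Image types from the table above; extend this set as new models appear.
SUPPORTED_TYPES = {"RGB"}


def validate_images(images):
    """Return a list of problems found in an `images` array (empty if none).

    Hypothetical client-side pre-check; the service performs its own validation.
    """
    problems = []
    for expected, item in enumerate(images):
        # Indexes must start at 0 and increase by 1.
        if item.get("index") != expected:
            problems.append(f"item {expected}: expected index {expected}")
        if item.get("type") not in SUPPORTED_TYPES:
            problems.append(f"item {expected}: unsupported type {item.get('type')!r}")
        data = item.get("data")
        # A missing or blank data field leads to an error on the service side.
        if not isinstance(data, str) or not data:
            problems.append(f"item {expected}: data is missing or blank")
            continue
        # Optional extra check (assumption): reject strings that are not base64.
        try:
            base64.b64decode(data, validate=True)
        except binascii.Error:
            problems.append(f"item {expected}: data is not valid base64")
    return problems


item = {"index": 0, "type": "RGB", "data": base64.b64encode(b"raw image bytes").decode("utf-8")}
print(validate_images([item]))  # []
```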

Data

All image data sent through the API must be base64 encoded. Liveness does not perform this encoding, so implement it in your own services. Here are two simple examples of base64 encoding: one in Python and one on the command line.

```python
import base64


def base64_encode_file(file_path):
    """Read the file and convert the binary data to a base64 string."""
    # The with-block closes the file even if reading fails.
    with open(file_path, "rb") as handle:
        raw_bytes = handle.read()
    # b64encode returns bytes; decode them to get a str for the JSON body.
    return base64.b64encode(raw_bytes).decode("utf-8")
```

```shell
$ base64 file_path
```

Put the encoded string in the `images[i].data` field as a string value. Omitting this field or leaving it blank causes an error. Here is a shortened example:

```json
{
  "images": [
    {
      "index": 0,
      "type": "RGB",
      "data": "/9j/4AAQSkZJRgAB="
    }
  ]
}
```

Auto rotation

Resource-consuming process: This operation consumes more resources than a standard request, which leads to higher latency.

Use it only when you fully understand how this function works.

Images sometimes include orientation information in their Exif (Exchangeable image file format) metadata. Operating systems usually rotate the image automatically according to this Exif information when displaying it, which can create the false impression that the image data itself is upright (for example, in portrait orientation). If such an image is encoded and sent to the service as-is, the model usually cannot recognize the face and returns a face-not-found error. Alternatively, the model may accept the misoriented image and process it incorrectly. Recognition accuracy cannot be guaranteed in either case.

Exif with rotation information
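To make the misconception concrete, here is a minimal sketch that assumes you have already read the Exif Orientation tag (0x0112) with an image library of your choice. `displayed_size` and `needs_rotation` are hypothetical helpers, not part of Liveness; they only show why the stored pixels and the on-screen picture can differ.

```python
# Exif Orientation tag (0x0112) values. 1 means the stored pixels are already
# upright. Values 5-8 tell the viewer to turn the image by 90 degrees
# (5 and 7 also mirror it), so width and height swap on screen.
QUARTER_TURN_ORIENTATIONS = {5, 6, 7, 8}


def displayed_size(stored_width, stored_height, orientation):
    """Return the (width, height) an Exif-aware viewer shows for the image."""
    if orientation in QUARTER_TURN_ORIENTATIONS:
        return stored_height, stored_width
    return stored_width, stored_height


def needs_rotation(orientation):
    """True when the stored pixels are not upright (tag present and not 1)."""
    return orientation not in (None, 1)


# A photo stored as 4032x3024 landscape pixels with Orientation 6
# appears as a 3024x4032 portrait picture in Exif-aware viewers.
print(displayed_size(4032, 3024, 6))  # (3024, 4032)
print(needs_rotation(6))  # True
```

When `needs_rotation` is true for an image you cannot pre-process, that image is a candidate for the `autoRotate` option described below.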

To avoid sending a misoriented image, set the `autoRotate` field to `true` when posting the image. The service then automatically detects the correct orientation and processes the image accordingly.

Use auto rotation when the image is sent from the end user: If your system or application has no control over the input image or has no pre-processing capabilities, we recommend enabling this function when posting the image.

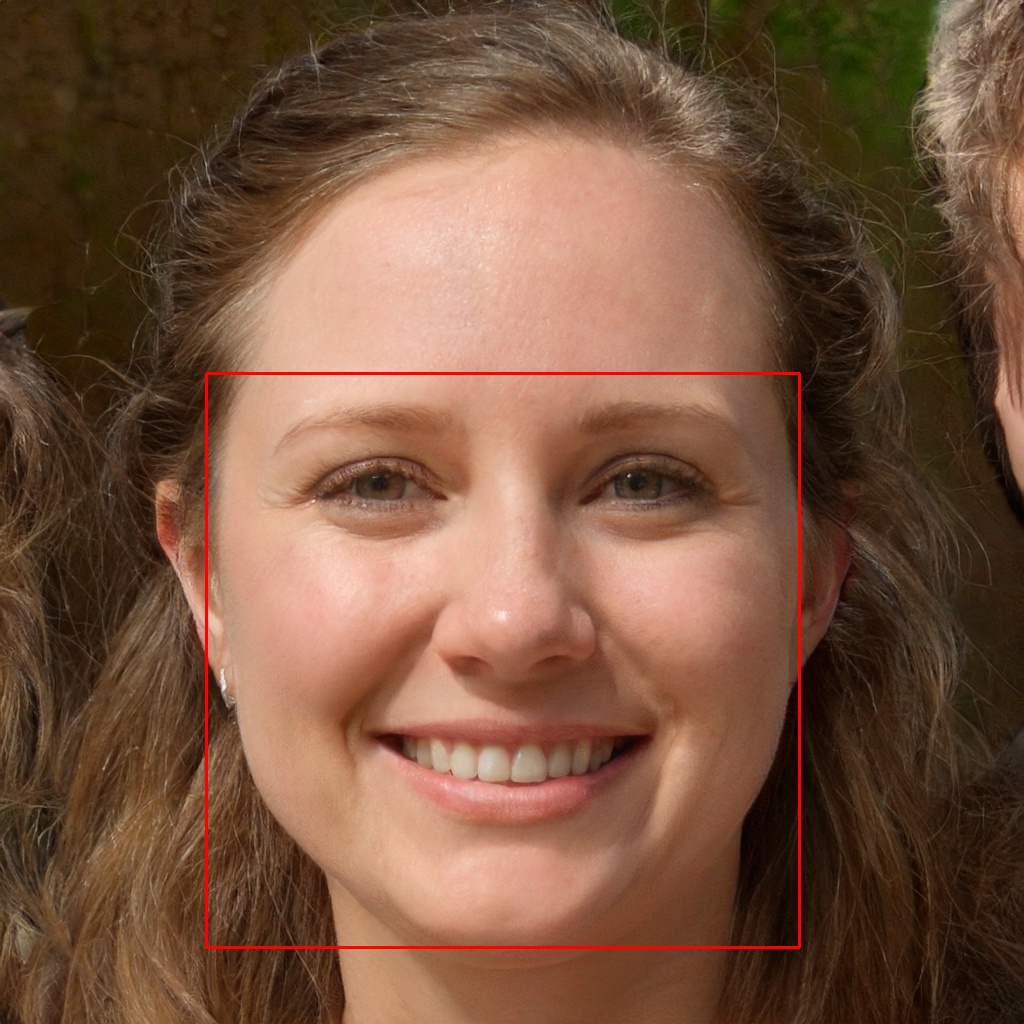
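A sketch of a request body with auto rotation enabled. This page does not say where `autoRotate` sits in the body, so placing it at the top level next to `images` is an assumption; check the endpoint reference before relying on it. `build_request_body` is a hypothetical helper that only serializes the body and does not send it.

```python
import base64
import json


def build_request_body(image_bytes, auto_rotate=True):
    """Serialize a single-image request body with auto rotation enabled.

    Assumption: `autoRotate` is a top-level field alongside `images`.
    """
    body = {
        "images": [
            {
                "index": 0,
                "type": "RGB",
                "data": base64.b64encode(image_bytes).decode("utf-8"),
            }
        ],
        "autoRotate": auto_rotate,
    }
    return json.dumps(body)


# Usage with a few placeholder bytes standing in for a real image file.
body = build_request_body(b"\xff\xd8\xff\xe0")
print(json.loads(body)["autoRotate"])  # True
```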

Position

The `position` field is always present in the response body. It contains four integer values that locate the face used for liveness detection. The `top` and `left` values are the pixel coordinates of the top-left corner of the face area. Together with the `width` and `height` values, they define a bounding box showing where the face is located in the image.

Positioning bounding box
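A minimal sketch of turning `position` into corner coordinates, assuming `position` is a top-level object in the response with the four integer fields described above. `bounding_box` is a hypothetical helper, and treating the right and bottom edges as exclusive is an assumed convention.

```python
def bounding_box(position):
    """Convert a `position` object into (left, top, right, bottom) pixels.

    right = left + width and bottom = top + height, so the box covers
    columns left..right-1 and rows top..bottom-1 (assumed convention).
    """
    left = position["left"]
    top = position["top"]
    return left, top, left + position["width"], top + position["height"]


# Hypothetical response fragment.
response = {"position": {"top": 120, "left": 80, "width": 200, "height": 240}}
print(bounding_box(response["position"]))  # (80, 120, 280, 360)
```

The tuple order matches the `(left, upper, right, lower)` box that Pillow's `Image.crop` accepts, so the result can be used directly to crop the face region for display.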

Because Liveness accepts images that contain multiple faces, it is important to use `position` to determine which face was processed. AnySee and Liveness share the same face detection logic: both detect and process only the largest face in the image. It is therefore safe to use the same image in both services.
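If your own pipeline already runs a face detector, the largest-face rule above lets you predict which face Liveness and AnySee will process. The sketch below assumes your detector returns hypothetical `(left, top, width, height)` tuples; how the service breaks ties between faces of equal area is not documented, so this is only an approximation.

```python
def largest_face(boxes):
    """Return the (left, top, width, height) box with the largest area.

    Mirrors the documented largest-face rule; tie-breaking is an assumption
    (max keeps the first box among equal areas).
    """
    if not boxes:
        return None
    return max(boxes, key=lambda box: box[2] * box[3])


# Hypothetical detector output for an image with three faces.
faces = [(10, 10, 50, 60), (200, 40, 120, 130), (400, 90, 30, 30)]
print(largest_face(faces))  # (200, 40, 120, 130)
```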

When multiple images are uploaded in a single request, `position` represents only the face position in the first input image (the one whose index is 0).
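A sketch of matching the response `position` to the right input image in a multi-image request. It looks the image up by its `index` value rather than by array position; `first_image_with_position` is a hypothetical helper, and the request and response shapes are assumed from this page.

```python
def first_image_with_position(request_body, response_body):
    """Pair the index-0 image from the request with the response `position`.

    Raises ValueError when the request contains no image with index 0.
    """
    first = next(
        (item for item in request_body["images"] if item["index"] == 0), None
    )
    if first is None:
        raise ValueError("request has no image with index 0")
    return first, response_body["position"]


# Hypothetical two-image request (data values are placeholders) and response.
request_body = {
    "images": [
        {"index": 1, "type": "RGB", "data": "<base64 of image 1>"},
        {"index": 0, "type": "RGB", "data": "<base64 of image 0>"},
    ]
}
response_body = {"position": {"top": 40, "left": 60, "width": 150, "height": 180}}
image, position = first_image_with_position(request_body, response_body)
print(image["data"])  # <base64 of image 0>
```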
